Power-hungry data centers and HPC systems adopt innovative, environmentally friendly solutions

Now that we’ve entered the era of exascale high-performance computing (HPC) systems, component makers are set on a new target: zettascale. Getting there means components like processors, GPUs, fabric, and storage solutions must continue their brisk development pace and overcome the bottlenecks that limit speed and scale. An even greater challenge is meeting the electrical demands of upcoming HPC systems and data centers sustainably. Some exascale systems already draw as much power as an entire town, and those requirements will grow dramatically over time. During the International Supercomputing Conference in May 2022, Intel’s Jeff McVeigh noted that computing infrastructure will use between 3% and 7% of global electricity production by 2030.

Improving cooling systems

Cooling is one of the largest consumers of power in data center infrastructure. In past years, air cooling through heat sinks and fans could keep processors within ideal operating temperatures, but these legacy approaches will be unable to keep up in the future. Some very interesting approaches already dissipate heat while consuming less electricity. For example, Microsoft announced success with its prototype underwater data center. Because the system was built into a sealed container, it could be submerged deep in the ocean, where cold seawater provided cheap, natural cooling. As a bonus, because the system remained vibration-free in a fully controlled environment, the server gear inside demonstrated greater longevity than hardware in a typical land-based data center.

In another scenario, High-Performance Computing as a Service (HPCaaS) provides cloud instances for advanced workloads in place of on-premises HPC infrastructure. atNorth, which hosts HPCaaS instances, takes a multi-tiered approach to sustainability. By building its data centers in Iceland, it benefits from low ambient temperatures that assist the cooling process. Iceland also offers several sources of green power from geothermal and hydroelectric generation. The combination helps atNorth data centers consume less electricity, and the electricity they do consume is plentiful, inexpensive, and renewable.

We also see cases in which heat produced by data centers is redirected to other uses. For example, several data centers in Stockholm use surplus heat to warm buildings. Another data center transfers excess heat to nearby greenhouses that help grow food for the local population.

Immersion cooling approaches

For data centers that cannot adopt solutions like those above, making in-house cooling of processors and accelerator cards more efficient remains a high priority. Liquid cooling approaches are stepping up to help address that need.

Today, three types of liquid cooling are in various stages of usage and development. These include liquid-assisted air cooling, immersion, and direct-to-chip recirculation. The most straightforward of these options is the liquid-plus-air approach. Water-filled heat sinks connect to a server rack, dissipating heat through a specialized radiator. In direct-to-chip cooling methods, a server rack has one or more pumps that drive cooled water into a jacket atop a processor. When warm water exits the jacket, tubes guide the liquid back to the rack’s cooling area. Since it’s a closed system, the same water is used over and over, making the process more environmentally friendly.
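The heat a closed water loop carries away follows the standard sensible-heat relation Q = ṁ · c_p · ΔT. The sketch below, a minimal illustration assuming pure water (c_p ≈ 4186 J/(kg·K)) and a hypothetical 500 W processor, estimates the flow rate a direct-to-chip loop would need through the processor's jacket. The function name and the load figures are illustrative assumptions, not taken from any vendor's design.

```python
# Specific heat of liquid water near room temperature, J/(kg*K).
WATER_CP = 4186.0
# Approximate density of water, kg per litre.
WATER_DENSITY = 1.0


def required_flow_lpm(heat_watts: float, delta_t_kelvin: float,
                      cp: float = WATER_CP, density: float = WATER_DENSITY) -> float:
    """Litres per minute needed so coolant warming by delta_t_kelvin absorbs heat_watts.

    Solves Q = m_dot * cp * dT for the mass flow m_dot (kg/s),
    then converts it to a volumetric flow in L/min.
    """
    if delta_t_kelvin <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_watts / (cp * delta_t_kelvin)
    return mass_flow_kg_s / density * 60.0


# Hypothetical 500 W processor, water allowed to warm by 10 K across the jacket.
print(f"{required_flow_lpm(500, 10):.2f} L/min")
```

Halving the allowed temperature rise doubles the required flow, which is one reason loop designers trade pump power against coolant temperature.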

In contrast to this approach, some OEMs design servers for immersion cooling. The process involves submerging server hardware in a “bathtub” of electrically non-conductive (dielectric) coolant. With “single-phase” immersion systems, the coolant remains a liquid. The warmer fluid rises to the top of the tub, where pumps draw it off for cooling, and the cooled fluid is then reintroduced into the tank. “Two-phase” immersion cooling systems use engineered fluids with a low boiling point: they remain liquid while cool and boil into vapor as they absorb heat from the components. The vapor is condensed back into liquid and returned to the tank. However, this approach requires careful selection of fluids to ensure effectiveness with little to no impact on the outside environment.
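The practical difference between the two immersion modes is how the fluid absorbs heat: single-phase fluid takes it up as sensible heat (warming by some ΔT), while two-phase fluid takes it up as latent heat of vaporization. The sketch below compares the coolant mass flow each mode needs for the same load. All fluid properties and the tank load are hypothetical placeholders chosen only to illustrate the calculation; they are not data for any commercial engineered fluid.

```python
def sensible_mass_flow(heat_w: float, cp_j_per_kg_k: float, delta_t_k: float) -> float:
    """kg/s of liquid needed if the fluid only warms (single-phase): Q / (cp * dT)."""
    return heat_w / (cp_j_per_kg_k * delta_t_k)


def latent_mass_flow(heat_w: float, h_fg_j_per_kg: float) -> float:
    """kg/s of fluid that must boil to absorb the load (two-phase): Q / h_fg."""
    return heat_w / h_fg_j_per_kg


# Hypothetical values for illustration only.
HEAT_LOAD_W = 20_000.0   # tank heat load, W
CP = 1_100.0             # liquid specific heat, J/(kg*K)
DELTA_T = 10.0           # allowed temperature rise in single-phase mode, K
H_FG = 100_000.0         # latent heat of vaporization, J/kg

single = sensible_mass_flow(HEAT_LOAD_W, CP, DELTA_T)
two = latent_mass_flow(HEAT_LOAD_W, H_FG)
print(f"single-phase: {single:.2f} kg/s, two-phase: {two:.2f} kg/s")
```

With these placeholder values, boiling moves the same heat with roughly a ninth of the mass flow; the real ratio depends entirely on the fluid chosen and the temperature rise allowed.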

Engineered fluids must deliver thermal efficiency without detrimental side effects. Companies like Green Revolution Cooling (GRC) and Submer are leading the charge in immersion cooling. Some novel approaches are still at the proof-of-concept stage, while others are ready for deployment in production environments. Optimized systems like these can cut an HPC system’s carbon footprint by 40% and its cooling expense by 90%. Submer is also advancing this work through a pilot program with Intel.

With liquid cooling, data centers are much quieter because they need fewer fans, or none at all. HPC systems also gain power efficiency, since more of the available electricity can go toward workloads, or other uses, rather than being diverted to cooling. For example, with help from the Hewlett-Packard (HP) team, the Peregrine HPC system at the National Renewable Energy Laboratory (NREL) uses its heated water to warm nearby Energy Systems Integration Facility (ESIF) buildings.

Today, organizations rate their data center’s power efficiency with the Power Usage Effectiveness (PUE) ratio: total facility energy divided by the energy delivered to IT equipment. A facility in which every watt went to computing would have a PUE of 1.0. The industry is already seeing extremely efficient liquid cooling approaches that achieve PUE values around 1.05.
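Because PUE is total facility energy divided by IT equipment energy, everything above 1.0 is overhead such as cooling and power distribution. The minimal sketch below computes PUE and the implied overhead; the 10 GWh annual IT load and the PUE 1.5 comparison point are hypothetical figures used only for illustration.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(pue_value: float, it_equipment_kwh: float) -> float:
    """Energy spent on non-IT loads (cooling, power conversion, lighting)."""
    return (pue_value - 1.0) * it_equipment_kwh


it_load = 10_000_000.0  # hypothetical 10 GWh per year of IT load
for ratio in (1.5, 1.05):
    print(f"PUE {ratio}: {overhead_kwh(ratio, it_load):,.0f} kWh of overhead")
print(pue(10_500_000.0, it_load))
```

For the same IT load, moving from a hypothetical PUE of 1.5 to 1.05 cuts the overhead energy by a factor of ten.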

Making component manufacturing greener

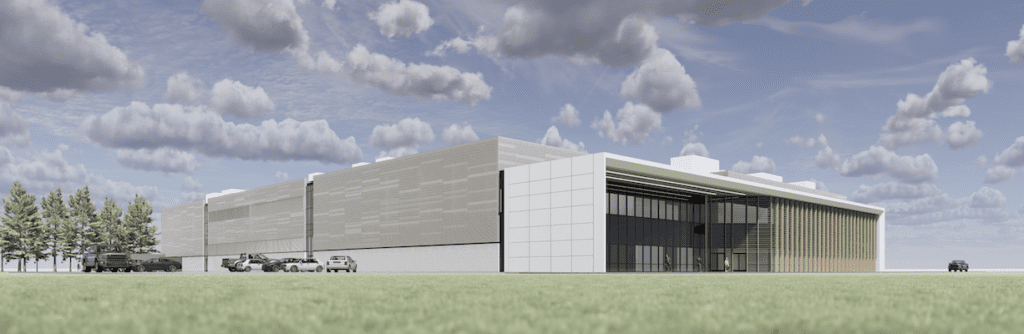

Of course, data center and HPC system components need to be manufactured en masse. Companies like Intel have committed to sustainability and are seeking ways to make their fabs and manufacturing plants more environmentally friendly. In Oregon, Intel’s new 200,000-square-foot facility, built for $700 million, will further research on cooling and sustainable water usage. Intel has already introduced the first open intellectual property (open IP) reference design for liquid cooling solutions. Currently, Intel purchases 82% of its power from renewable energy sources and appears on track to meet its goal of using 100% renewable power in its factories, data centers, and facilities.

Other OEMs and hardware manufacturers are also making essential strides toward green computing. By making hardware components more recyclable and durable, they extend the parts’ functional life and keep them out of landfills. Intel also works with OEMs to reduce their carbon footprint through “chiplet” component designs. Built from chiplets, accelerator modules and processors can be smaller and optimized for specific workloads. As a result, they require less carbon to manufacture, are more energy-efficient, and can be upgraded or repaired to extend their service life.

AI is also getting into the act of making more earth-friendly components. Semiconductor manufacturers like AMD, Intel, and Nvidia tap AI to guide efficient processor design. The powerful combination of HPC and AI can identify designs worthy of consideration, so fewer physical prototypes are needed.

New technologies built with energy efficiency in mind

Moving data requires energy, so shortening and streamlining that movement reduces the electricity an HPC system consumes. Even minor design improvements, each offering a nanowatt or nanosecond benefit, can add up to significant energy savings. Near-Memory Computing (NMC) is an older concept that’s getting renewed attention from engineers today. By reducing the physical distance data travels between memory and processors, NMC lowers the energy needed to move it. Beyond the hardware challenges of NMC, there’s a software component, too. Developers must write code for a heterogeneous computing implementation so the processor and an accelerator can divide the workload appropriately. Several vendors offer on-chip AI acceleration based on the NMC concept of data movement. Others use spatial and temporal caching to keep frequently used instructions and data in the memory blocks closest to the processor.
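One simple way to divide work between a processor and an accelerator is a static split in proportion to each device's throughput, so both finish at about the same time. The sketch below shows that proportional split; the function name `split_work` and the throughput figures are hypothetical, and real heterogeneous frameworks typically rely on more sophisticated, dynamic scheduling.

```python
def split_work(total_items: int, throughputs: dict[str, float]) -> dict[str, int]:
    """Assign items to devices in proportion to their throughput (items/s).

    Uses largest-remainder rounding so the shares always sum to total_items.
    """
    total_rate = sum(throughputs.values())
    if total_rate <= 0:
        raise ValueError("need at least one device with positive throughput")
    exact = {name: total_items * rate / total_rate for name, rate in throughputs.items()}
    shares = {name: int(value) for name, value in exact.items()}
    leftover = total_items - sum(shares.values())
    # Hand the remaining items to the devices with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda name: exact[name] - shares[name], reverse=True)
    for name in by_remainder[:leftover]:
        shares[name] += 1
    return shares


# Hypothetical rates: the accelerator processes items three times faster than the CPU.
print(split_work(1_000, {"cpu": 250.0, "accelerator": 750.0}))
```

A static split like this works when per-item cost is uniform; irregular workloads usually need work stealing or other runtime balancing.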

Greater I/O efficiency also helps reduce energy consumption. High Bandwidth Memory (HBM) uses stacked memory technology to move data to CPUs and GPUs. Some HBM solutions can approach the speed of on-chip RAM. JEDEC published the HBM3 standard in January 2022; it supports up to 819 GB/s of bandwidth per device and memory layers of up to 32 Gb each, in stacks of up to 16 layers.
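The 819 GB/s figure follows from HBM3's 1024-bit interface running at up to 6.4 Gb/s per pin, and device capacity follows from per-layer density times stack height. A quick check of that arithmetic (the 1024-bit width and 6.4 Gb/s pin rate come from JEDEC's HBM3 announcement rather than from this article):

```python
def hbm_bandwidth_gb_s(interface_bits: int, pin_rate_gbit_s: float) -> float:
    """Peak per-device bandwidth in GB/s: width x per-pin rate / 8 bits per byte."""
    return interface_bits * pin_rate_gbit_s / 8


def hbm_capacity_gb(layer_density_gbit: int, layers: int) -> float:
    """Device capacity in gigabytes from per-layer density in gigabits."""
    return layer_density_gbit * layers / 8


print(hbm_bandwidth_gb_s(1024, 6.4))  # → 819.2
print(hbm_capacity_gb(32, 16))        # → 64.0
```

Note the units: layer density is quoted in gigabits (Gb), so a 16-high stack of 32 Gb layers holds 64 gigabytes (GB).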

Other new approaches speed up data movement between stacked dies. Intel’s Foveros Direct is a hybrid bonding approach that replaces soldered bumps with direct copper-to-copper bonds at pitches below ten microns. This approach can deliver an order-of-magnitude improvement in interconnect density for 3D stacking.
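Interconnect density scales with the inverse square of bond pitch, which is why shrinking the pitch yields such a large gain. The sketch below assumes a simple square grid of bonds; the 36-micron comparison pitch is an assumed reference value for a conventional micro-bump process, not a figure from this article.

```python
def connections_per_mm2(pitch_um: float) -> float:
    """Bonds per square millimetre for a square grid at the given pitch (microns)."""
    if pitch_um <= 0:
        raise ValueError("pitch must be positive")
    per_side = 1000.0 / pitch_um  # bonds along one millimetre
    return per_side ** 2


reference = connections_per_mm2(36.0)  # assumed conventional micro-bump pitch
hybrid = connections_per_mm2(10.0)     # the ten-micron upper bound for hybrid bonding
print(f"{reference:,.0f} vs {hybrid:,.0f} per mm^2 ({hybrid / reference:.1f}x)")
```

Under these assumptions, the density ratio is (36/10)² ≈ 13, consistent with an order-of-magnitude improvement; any pitch below ten microns increases it further.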

We’re likely to see more experimentation with, and hopefully implementation of, photonics in the future. Photonics can offer tremendous speed and energy efficiency benefits because data travels as light rather than as electrical signals over copper.

Open-source efforts

The open source community is also making vital strides to reduce HPC and data center energy consumption. For example, Compute Express Link (CXL), initially developed by Intel, is an open, standards-based interconnect built on PCI Express Gen 5 technology. CXL seeks to improve memory coherency and data transfer speed between processors, GPUs, and other accelerators. Supporters in the CXL Consortium include companies like Cisco, Facebook, Google, and Microsoft.

The Open Compute Project (OCP) is making significant strides, too. Intel and other data center partners are advancing OCP’s focus on modular designs and metrics for reducing carbon footprint. Through OCP, the Blue Glacier project published a Revision 1 specification for modular design. Intel also made its oneAPI open toolkit available, which can help developers reduce the power requirements of many AI and HPC workloads.

While the above measures will not entirely solve data center and HPC systems’ thirst for electricity, the combined steps can make a significant difference toward greener computing.

Rob Johnson spent much of his professional career consulting for a Fortune 25 technology company. Currently, Rob owns Fine Tuning, LLC, a strategic marketing and communications consulting company based in Portland, Oregon. A lifelong technology, audio, and gadget enthusiast, Rob also writes for TONEAudio Magazine, reviewing high-end home audio equipment.

This article was produced as part of Intel’s editorial program, with the goal of highlighting cutting-edge science, research, and innovation driven by the HPC and AI communities through advanced technology. The publisher of the content has final editing rights and determines what articles are published.