Posted on behalf of Ralph Biesemeyer, Intel Global Marketing, Product Sustainability Strategist

Over the past several years at Intel, my role has focused on developing Intel® Select Solutions. The work is both exciting and challenging because our top goal is making our customers successful with the solutions we create. At the same time, we believe Intel has a responsibility to keep honing our components to reduce their energy consumption in HPC and data center deployments. As systems get more powerful, they generally consume more energy and generate more heat, which must be managed effectively. In this blog, I’d like to share some creative approaches to this challenge and what Intel is doing to make our solutions more environmentally friendly.

Making cooling more efficient

We are reaching an inflection point where compute density, processor core counts, and components like accelerator cards require a great deal of power to operate effectively. In the past, air cooling using fans and heat sinks mounted on processors could keep them within acceptable operating temperatures. Today, though, the challenge is bigger. Modern processors generate more heat, and as they get more powerful, air cooling struggles to keep up. As a result, even the air-conditioned rooms housing large data centers benefit from liquid cooling as an alternative.

The industry uses several approaches to liquid cooling today: liquid-assisted air, direct-to-chip recirculation, and immersion. The liquid-assisted air method is the least complex and operates as a radiator does. Servers are fitted with heat sinks that contain water, with pipes running to a radiator-like device in the server chassis. Direct-to-chip cooling is like liquid-assisted air deployed at the rack level. Water is pumped into a jacket sitting on a CPU or an accelerator. The water gathers the processor’s heat, departs the jacket, exits the rack, and is then cooled and recirculated. In contained systems like this, the same water is used repeatedly. Plus, it cools far more effectively than approaches that rely only on heat sinks and air circulated by facility fans.

Immersion cooling works differently. As the name implies, systems are submerged in liquid. In the simplest terms, a rack is positioned inside a tub full of engineered coolant. But a little conversion must be done to each server beforehand. Operating system and BIOS adjustments must tell the system not to activate any fans since they are unnecessary. Some OEMs design servers specifically for immersion and eliminate fans altogether because the tank handles thermal management, which simplifies the server design.

Immersion tanks take two different approaches in how they dissipate heat. With “single phase” systems, coolant remains in the tub in a liquid form. Heated fluid is a little less dense than cold fluid, so it naturally rises to the top of the tub. From there, pumps skim off the warmest liquid. Once cooled, it’s pushed back into the bottom of the tank.

“Two-phase” immersion cooling works differently. Engineered fluids used in the process remain in a liquid state when cool, but they turn into gas when heated by a server component, and that gas rises to the top of the sealed tank. The gas is then cooled, becomes liquid again, and is returned to the tank. One challenge is ensuring an engineered fluid is both effective and environmentally friendly.

With efficient immersion cooling, processors can operate at higher clock speeds without overheating. Therefore, it’s possible to improve system performance because available power can be allocated to actual workloads rather than being spent on heat removal. And there’s a bonus – liquid cooling is a lot quieter than fans.

Data center efficiency is measured by Power Usage Effectiveness (PUE), a simple calculation of how much of a facility’s energy actually reaches its computing equipment. The goal is to get a PUE as close to 1.00 as possible, which indicates a fully optimized system. With immersion cooling, we’ve seen data center PUE numbers between 1.03 and 1.05. That’s a great result, and we’ll continue striving to do even better.
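
As a rough illustration of the calculation, PUE is conventionally defined as total facility energy divided by the energy delivered to IT equipment. The sketch below assumes that standard definition; the function names and the kilowatt-hour figures are hypothetical and are not measurements from any Intel deployment.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Energy spent on everything except IT equipment (cooling, lighting, power losses)."""
    return total_facility_kwh - it_equipment_kwh


if __name__ == "__main__":
    # Hypothetical monthly figures, chosen only to illustrate the arithmetic.
    it_load = 1_000_000.0    # kWh consumed by servers, storage, and networking
    facility = 1_040_000.0   # kWh consumed by the whole facility
    print(f"PUE: {pue(facility, it_load):.2f}")
    print(f"Overhead: {overhead_kwh(facility, it_load):,.0f} kWh")
```

With these made-up numbers, the ratio lands inside the 1.03 to 1.05 range mentioned above, meaning roughly four percent of extra energy goes to overhead for every unit delivered to computing.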

We’re excited about the future potential for new engineered fluids that may increase thermal efficiency without any unwanted side effects. Green Revolution Cooling (GRC) and Submer are among the leading vendors developing immersion cooling technology. Advanced liquid cooling technologies are currently in various stages of proof-of-concept and production deployment. We anticipate that this approach can reduce cooling electricity expenses by 90% and the carbon footprint by 40%. You can read more about Intel’s pilot with Submer here.

Intel also announced plans to invest more than $700 million in a 200,000-square-foot lab in Oregon. The team there will focus on capabilities related to heating, cooling, and water usage. We also introduced the first open intellectual property (open IP) immersion liquid cooling solution and reference design.

Creating and using renewable energy sources

There’s no easy way around the energy demands of HPC systems and data centers. However, there’s plenty we can do to make powering them “greener.” The top-level goal is reducing or eliminating fossil fuel-based energy sources. Intel sees several creative ways our customers can join us toward that common goal.

Some data center architects take creative approaches to make the most of sustainable energy. One Intel customer, atNorth, hosts HPC as a Service (HPCaaS) instances that allow customers to run their advanced workloads in the cloud rather than using on-premises HPC infrastructure. Because atNorth’s data centers reside in Iceland, the company can embrace the cooling benefits of chilly local temperatures averaging five degrees Celsius. Plus, Iceland is known for its abundant hydroelectric and geothermal energy sources, so atNorth makes the best use of local, low-cost electricity.

Another company’s data center uses its immersion cooling systems to harvest and redirect heat for other purposes. Like atNorth, the company placed its data center in a region with a colder climate so that Mother Nature could assist with cooling. However, it takes the idea of renewable energy even further. The heat from the data center is routed to greenhouses on the property to help grow food. Another innovative use of “waste heat” was implemented at a data center in Stockholm, where the excess heat is used to warm apartment buildings.

The National Renewable Energy Laboratory’s (NREL) Peregrine HPC system also employs liquid cooling for greater energy efficiency. NREL teamed up with Hewlett-Packard (HP) to build the energy-efficient supercomputer, and the hot water it produces is used to heat the Energy Systems Integration Facility (ESIF) buildings.

Another unique approach to storing sustainable power doesn’t only involve data lakes; it uses real lakes for a process called Pumped Storage Hydropower. When renewable power like solar is in surplus, the energy is used to pump water from a lower lake to a lake at a higher elevation. When renewable power is needed, the water from the higher lake passes through turbines to generate electricity as it descends to another lake at a lower elevation.
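
To give a sense of scale, the energy held in the upper lake can be estimated from basic physics: the mass of the water times gravitational acceleration times the height difference, reduced by conversion losses. The sketch below is a back-of-the-envelope estimate only; the function name, the reservoir volume, the height difference, and the 80% efficiency are all hypothetical assumptions and do not describe any specific facility.

```python
WATER_DENSITY_KG_PER_M3 = 1000.0   # fresh water, approximate
GRAVITY_M_PER_S2 = 9.81            # standard gravitational acceleration
JOULES_PER_KWH = 3.6e6             # 1 kWh = 3.6 million joules


def recoverable_energy_kwh(volume_m3: float, head_m: float,
                           efficiency: float = 0.8) -> float:
    """Estimate electricity recoverable from water stored at height.

    E = density * g * head * volume * efficiency, converted from joules to kWh.
    The default efficiency of 0.8 is an assumed placeholder, not a measured value.
    """
    if volume_m3 < 0 or head_m < 0:
        raise ValueError("volume and head must be non-negative")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    joules = (WATER_DENSITY_KG_PER_M3 * GRAVITY_M_PER_S2
              * head_m * volume_m3 * efficiency)
    return joules / JOULES_PER_KWH


if __name__ == "__main__":
    # Hypothetical reservoir: one million cubic meters, 100 m above the lower lake.
    energy = recoverable_energy_kwh(volume_m3=1_000_000, head_m=100)
    print(f"Recoverable energy: {energy:,.0f} kWh")
```

Under these assumptions, doubling either the height difference or the reservoir volume doubles the stored energy, which is why such systems favor large lakes with a steep drop between them.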

I love these stories because they demonstrate how creatively data center and utility companies can use renewables and unwanted heat energy to benefit people and our environment.

Industry commitment to sustainability

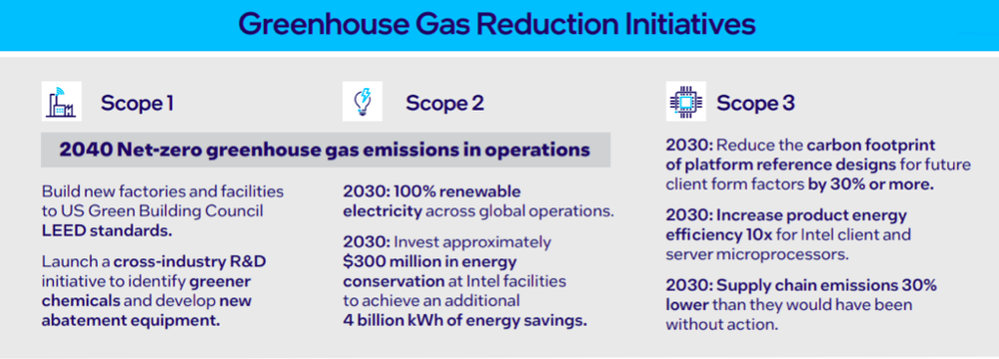

Intel has made vital commitments to sustainability, and it’s one of the things that makes me proud to work here. I’ll touch on a few of the approaches we are taking.

First, we need our facilities and fabs to use more renewable power. You can find out how we’re doing that at our newest facilities in the U.S. and Europe. Intel is currently buying 82% of its power from renewable sources worldwide. We are approaching our goal to use 100% renewable power in our factories, data centers, and facilities.

Second, we need to continue our efforts to make our products more energy-efficient. Estimates suggest that by 2030, three to seven percent of global energy will be consumed by data centers, and that number will continue to grow unless technologies can work harder while using less power. Therefore, we must figure out ways to coax greater performance from each watt of energy and reduce the “energy tax” required in data movement.

Third, we continue working with our OEM partners to make servers more renewable. No electronics can last forever, but if our customers get longer-term use from our products, we can reduce – and hopefully eliminate – the number of old parts in landfills.

Along with key data center partners, Intel contributes to the Open Compute Project (OCP). OCP’s focus is advancing modular designs, establishing carbon footprint metrics, and more. The most recent advancements are specified in the Blue Glacier project, which published a Revision 1 specification for modular design through OCP. We also offer the Intel oneAPI open toolkit, which can help developers reduce the amount of power needed to run many HPC, AI, and other workloads.

Another way Intel seeks to reduce the carbon footprint at a platform level is through modular or “chiplet” component design. The chiplet approach means processors and accelerator modules can be repaired or upgraded independently to extend the useful life of the platform. Chiplet components can also be purpose-built for custom workloads to optimize power consumption in a smaller physical package with a smaller carbon footprint.

There are several additional ways Intel and open-source technologies will reduce power requirements for HPC. I’ll share a few examples. First off, HPC systems often run their CPUs at 100% capacity. By making the most of accelerators built into our Intel® Xeon® Scalable processors, we’ve achieved significant performance increases in several types of workloads. AI accelerators and Intel AVX-512, for example, place high-demand tasks nearer to the processor to save power during the data transfer process. We also seek to reduce electricity consumption using AI-based telemetry that monitors and controls electricity throughout a data center’s network. The processors feature registers for monitoring cache, CPU frequencies, memory bandwidth, and input/output (I/O) access, so the telemetry capabilities can potentially offer significant energy savings at scale.

We’re also implementing new concepts that speed data transfer between the dies on a chip and the connections off-chip. Intel’s Foveros Direct is a hybrid bonding approach that replaces soldered connections with fused copper-to-copper connections. By fusing tiny copper bumps, less than ten microns each, the innovation helps move data much more rapidly while using less energy.

While the above approaches do not eliminate the power consumption challenge, they help reduce the problem. Small gains compounded over time can have a much larger impact.

Faster innovation is better for the environment

Intel’s work is founded on Moore’s Law, yet most people have not thought of Moore’s Law as a driver of sustainability over the last five decades. Now we are finding ways to get better performance from built-in accelerators that use fewer resources. Technologies like photonics have the potential to eliminate wired electrical connections and replace them with data transmission through light. Photonics could help reduce power consumption dramatically while making HPC systems quicker.

So, if anything, I think industry sustainability initiatives have the potential to drive Moore’s Law further, so we can do more with less!

The industry has come a long way, and I’m proud to play a part in Intel’s leadership initiatives in sustainable computing. By researching new and creative ways to cool servers, harvest “waste” heat, improve manufacturing processes, and design products to be more energy-efficient, we have the potential to drive positive environmental change around the globe.

Notices & Disclaimers

Performance varies by use, configuration, and other factors. Learn more at www.Intel.com/PerformanceIndex.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

For workloads and configurations visit www.Intel.com/PerformanceIndex. Results may vary.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

Your costs and results may vary.

Intel technologies may require enabled hardware, software, or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.
