Published July 26th, 2021

Photo credit: Laurent stock.adobe.com

Gadi Singer is Vice President and Director of Emergent AI Research at Intel Labs, leading the development of the third wave of AI capabilities.

Rethinking knowledge layering and construction for long-term viable AI

Intelligence, be it human or machine, is based on knowledge. Finding the right balance between the effectiveness of intelligence (what it can achieve) and its efficiency (how much it costs in energy or other currency) is essential. In my WAIC21 keynote presentation, I introduce Cognitive AI and propose that humans and intelligent machines apply a principle based on Three Levels of Knowledge (3LK), namely instantaneous, standby, and retrieved external knowledge, due to factors of scale, cost, diversity of tasks, and continuous adaptation. Thrill-K is then introduced as the AI systems architecture blueprint that implements 3LK principles for next-generation AI.

The last decade has been a time of deep learning (DL), with algorithmic changes including the implementation of deep neural networks (DNNs), long short-term memory (LSTM), and, of course, Transformers. We have also seen innovation in frameworks like TensorFlow, PyTorch, and others, which drove new hardware such as GPUs, CPUs with special processing units, and neural network (NN) processing units. DL has served as a viable technology and will soon be applicable in segments such as transportation, financial services, and infrastructure.

Despite all the great achievements and potential of DL, the next generation of architecture for more advanced AI is fast approaching. By 2025, we are likely to see a categorical jump in the competencies demonstrated by AI, with machines growing markedly wiser. Change is needed, however, to address some of the fundamental limitations of DL, as explained in my last article, “The Rise of Cognitive AI.” Here, I will explore a new architectural approach for integrating knowledge into AI systems that can help mitigate some of the fundamental limitations of today’s AI systems.

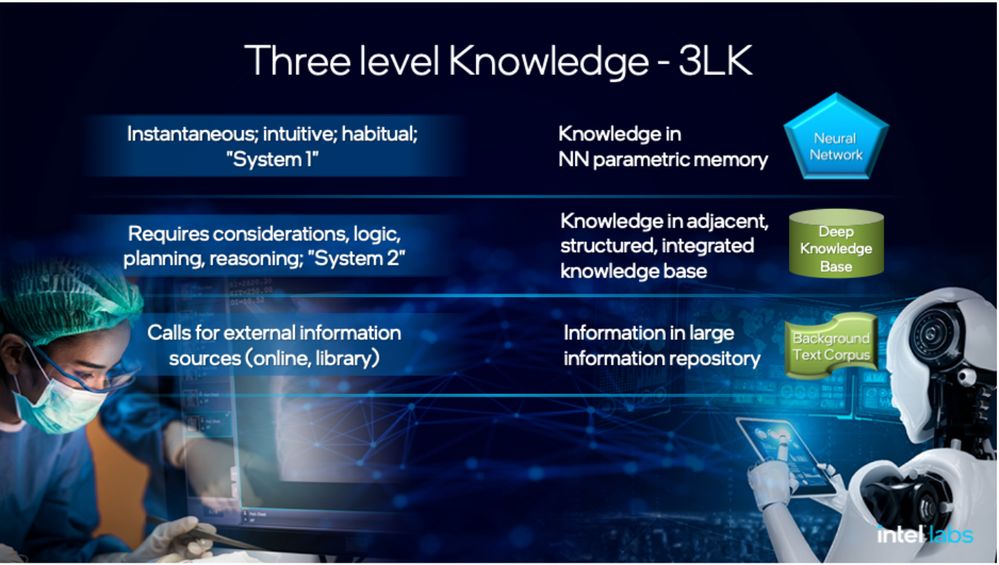

Three Levels of Knowledge

Living organisms and computer systems alike must have instantaneous knowledge to allow for rapid response to external events. This knowledge represents a direct input-to-output function that reacts to events or sequences within a well-mastered domain. In addition, humans and advanced intelligent machines accrue and utilize broader knowledge with some additional processing. I refer to this second level as standby knowledge.

Actions or outcomes based on this standby knowledge require processing and internal resolution, which makes them slower than those based on instantaneous knowledge. However, standby knowledge is applicable to a wider range of situations. Humans and intelligent machines also need to interact with vast amounts of world knowledge so that they can retrieve the information required to solve new tasks or to increase their standby knowledge. Whatever the scope of knowledge within the human brain or the boundaries of an AI system, there is substantially more information outside it, or only recently relevant, that warrants retrieval. I refer to this third level as retrieved external knowledge.

Humans and intelligent machines must access all three levels of knowledge (instantaneous, standby, and retrieved external knowledge) to balance scale and cost against the ability to complete a diverse range of tasks and to adapt continuously. To demonstrate, I will map some examples to the three levels of knowledge, applying them to two situations: a doctor prescribing two medications to a patient, and a driver driving his car through a neighborhood.

· Instantaneous knowledge: A patient asks their doctor about administering a medication for a heart condition and a common pain-relief medicine. She approves it immediately ‘without needing to think’ because these two medications are co-administered routinely and safely. The response is immediate and automatic.

Similarly, when presenting his System 1 / System 2 model (based on Daniel Kahneman’s book “Thinking, Fast and Slow”), Yoshua Bengio gave the example of a driver navigating a very familiar route without the need for much attention. The knowledge of the road, the route, and the driving dynamics is all instantaneous. Bengio described this mode as intuitive, fast, unconscious, and habitual.

· Standby knowledge: A patient asks their doctor about administering a medication for a heart condition and a less commonly used lung infection medication. While the doctor has never prescribed those two medications together before, she understands their underlying mechanisms and potential interactions. She needs to draw on her broader knowledge base and use reasoning to reach a conclusion about whether they can be administered together.

In the driver example, Bengio has the driver navigate an unfamiliar area with challenging road conditions. The driver now needs to consider all the visual input and apply his attention to navigating the situation safely. Bengio called this System 2 thinking and characterized it as slow, logical, sequential, conscious, linguistic, and algorithmic, involving planning and reasoning.

· Retrieved external knowledge: A patient asks their doctor about administering a medication for a heart condition together with a new COVID-19 treatment that was approved just last month and has very recent studies that the doctor has not yet explored. The doctor might consult external knowledge, in addition to applying existing knowledge and skills, to obtain pertinent additional information and guidance.

As for the driving example, the driver might reach a blocked road. He might pull out his phone to look at the area map or get GPS directions to know how to proceed. This situation can only be resolved by retrieving additional external knowledge. In 3LK terminology, the driver used the third level: retrieved external knowledge.

Combining these three levels of knowledge allows people to respond effectively to a wide range of tasks and situations, from fully mastered ones to those that require obtaining and utilizing never-before-seen information. Often, the scope and variety of tasks are too broad to be mastered, and mastering a new set of tasks can be costly. Standby knowledge can substantially expand the range of responses with knowledge that is available for reasoning in novel situations. However, the amount of potentially relevant information is vast and changes as the world evolves and interests shift. In many real-life situations, a third level of retrieved external knowledge is needed.

Envisioning Thrill-K — Three Levels of Knowledge Architecture for Machine Intelligence

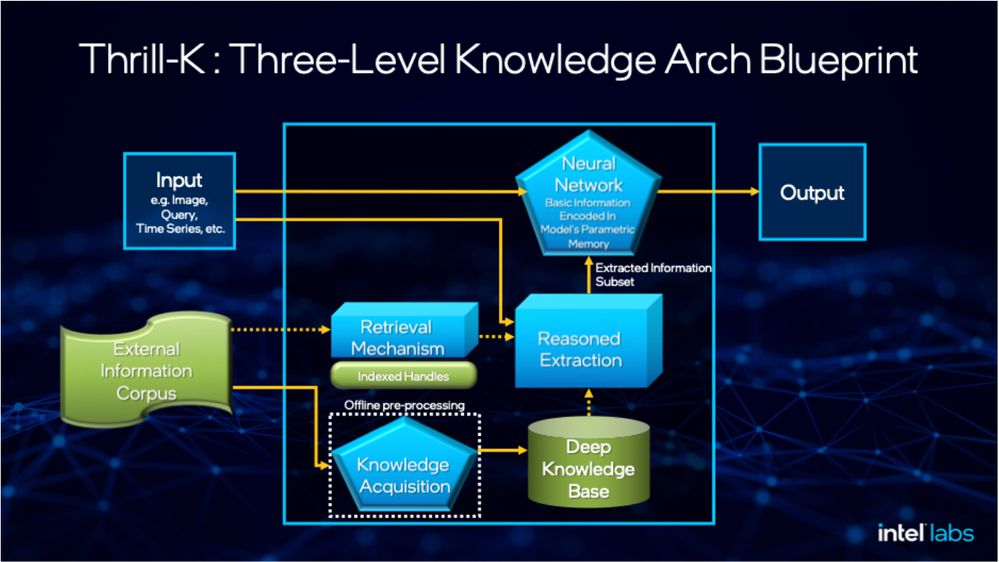

Thrill-K (pronounced “threel-kay”) is a proposed architectural blueprint for AI systems that utilize the three levels of knowledge (3LK). It provides a means for representing and accessing knowledge at three levels: in parametric memory for instantaneous knowledge, in an adjacent deeply structured knowledge base for reasoned extraction, and in broad digital information repositories such as Wikipedia, YouTube, and news media for retrieval.

In a series of articles on the classification of information-centric AI system architectures, I outlined three classes of information access and utilization: systems with fully encapsulated information (e.g., recent end-to-end deep learning systems and language models like GPT-3), systems with deeply structured knowledge (e.g., extraction from knowledge graphs like ConceptNet or Wikidata), and retrieval-based systems with semi-structured adjacent information (e.g., retrieval from Wikipedia). In the conclusion of that series, I introduced Class 3++, which integrates all three levels of knowledge (see the section on “What AI System Architecture is best for the task?”). This inclusive Class 3++ architecture is what we refer to as the Three-Level Knowledge (or Thrill-K) architecture.

Figure 2 provides the blueprint of a tiered AI system that supports higher intelligence. This Thrill-K system diagram includes all the building blocks of such systems. However, the flow (depicted by the arrows) can change based on the usage and configuration. In the example flow shown in the diagram, the sequence starts with the NN, is followed by the KB, and then, if needed, by external resources. The system first takes the direct input-to-output path, using the instantaneous knowledge encoded in parametric memory. If it detects uncertainty or low confidence in the direct path, the system performs reasoned extraction from its deep knowledge base. This knowledge base relies on machine-learning-based knowledge acquisition to update and refresh its contents as new information becomes relevant and useful enough to be added. Finally, if the AI system still cannot find the knowledge it needs, the retrieval mechanism accesses and retrieves the necessary information from the available repositories. Other flows are also possible. For example, if the task of the AI is to search a KB or to find paragraphs in an external repository, the same building blocks will be configured in a different sequence.
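The example flow can be sketched as a simple confidence-gated cascade. This is a minimal illustration under stated assumptions, not the Thrill-K implementation. Everything in it is hypothetical: the `ThrillKPipeline` class, the 0.8 confidence threshold, and the toy level functions. Those functions stand in for a neural network, a knowledge base, and a retriever, loosely mirroring the doctor example.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# A level maps a query to (answer, confidence), or None if it has nothing to offer.
Level = Callable[[str], Optional[Tuple[str, float]]]


@dataclass
class ThrillKPipeline:
    """Hypothetical three-level cascade: NN -> KB -> external retrieval."""
    neural: Level            # instantaneous knowledge (parametric memory)
    knowledge_base: Level    # standby knowledge (reasoned extraction)
    retriever: Level         # retrieved external knowledge
    threshold: float = 0.8   # assumed confidence cutoff for accepting an answer

    def answer(self, query: str) -> Tuple[str, str]:
        """Return (answer, level_used), escalating on low confidence or no result."""
        levels = (("instantaneous", self.neural),
                  ("standby", self.knowledge_base),
                  ("external", self.retriever))
        for name, level in levels:
            result = level(query)
            if result is not None and result[1] >= self.threshold:
                return result[0], name
        return "unknown", "none"


# Toy stand-ins for the three levels (all hypothetical).
def neural(q: str) -> Optional[Tuple[str, float]]:
    return ("approve", 0.95) if q == "common pair" else ("guess", 0.3)


kb_facts = {"rare pair": ("approve after reasoning", 0.9)}


def retriever(q: str) -> Optional[Tuple[str, float]]:
    return ("consult recent studies", 0.85) if q == "new treatment" else None


pipeline = ThrillKPipeline(neural, kb_facts.get, retriever)
for q in ("common pair", "rare pair", "new treatment", "unseen"):
    print(q, "->", pipeline.answer(q))
```

Reordering the `levels` tuple inside `answer` is one simple way to express the alternative flows, such as searching the KB first.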

It should be noted that while the main processing path is depicted here as a neural network, the same tiering principle applies to other types of machine learning, with information integrated into the processing as part of the instantaneous input-to-output path.

The volume of information at these levels of knowledge is expected to differ in scale. The standby knowledge contains orders of magnitude more than the instantaneous knowledge, and the external knowledge eclipses the scope of internal standby knowledge. While the size of each knowledge level will depend on the application, rough estimates of existing knowledge sources can provide insights into the scaling of information volume across the three levels.

For example, even a very large language model such as T5-11B, whose weights exceed 40 GB, is still about 30x smaller than the total uncompressed size of a large structured knowledge source such as Wikidata (1317 GB as of July 1, 2021). Wikidata, in turn, is about 30x smaller than the 45 TB text corpus of books and web data used to train GPT-3. While data size is only a crude proxy for measuring information, a 30x scaling factor can serve as a lower bound for estimating how information volume increases at each subsequent level of knowledge in a Thrill-K system. This particular scaling factor applies to language-only systems. Since the knowledge structures for this architecture are designed to be inherently multimodal, this single-modality factor significantly underestimates the actual scaling factor.
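The ratios behind the 30x figure can be checked with a few lines of arithmetic. The sketch adds one assumption that the text does not state: T5-11B's weights are taken as 11 billion FP32 parameters at 4 bytes each. That gives 44 GB, which is consistent with "greater than 40 GB". Decimal units are used throughout (1 TB = 1000 GB).

```python
# Assumption: T5-11B stored as FP32 (4 bytes per parameter); the text only says ">40 GB".
T5_PARAMS = 11e9
BYTES_PER_PARAM = 4
nn_gb = T5_PARAMS * BYTES_PER_PARAM / 1e9   # 44.0 GB of weights

kb_gb = 1317.0          # Wikidata, uncompressed, as of July 1, 2021 (from the text)
external_gb = 45_000.0  # GPT-3 training corpus, 45 TB (decimal units assumed)

nn_to_kb = kb_gb / nn_gb              # instantaneous -> standby
kb_to_external = external_gb / kb_gb  # standby -> retrieved external
end_to_end = external_gb / nn_gb      # instantaneous -> retrieved external

print(f"NN -> KB:       {nn_to_kb:.1f}x")
print(f"KB -> external: {kb_to_external:.1f}x")
print(f"NN -> external: {end_to_end:.0f}x (vs. {30 * 30}x if each step were exactly 30x)")
```

The factors compound. Two successive steps of at least 30x therefore put the retrieved external level roughly three orders of magnitude above the instantaneous level.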

The Case for Efficiency as the Driver for Viable Solutions at Scale

As Homo sapiens evolved over the last few hundred thousand years, their intellectual capabilities grew considerably, and the amount of information they had to handle and the range of tasks they had to solve increased substantially. Some estimate that the size of the brain has stayed about the same for the last 200,000 years, balancing its evolutionary value against the cost of burning 20 percent of our food at a rate of about 15 watts. What if the human brain had required a 10X increase in volume to support this new range? The difference lies in how efficiently the resources provided are used in the brain and in recent sub-structures like the neocortex.

Much of the research work in AI focuses on outcomes while playing down the efficiency of the models in terms of dataset size, specialized compute configurations, compute and energy cost, and environmental footprint. As a technology that is expected to permeate all industries, impact much of computing, and be deployed anywhere from large datacenters to devices on the edge, efficiency needs to be a primary consideration along with the achieved capabilities and outcome.

Size matters! A future generative pre-trained Transformer 6 (GPT-6) language generator is therefore unlikely to be an AI solution that is broadly deployed and utilized. If the GPT approach continues into successive generations, it may evolve with exponential growth in the number of parameters, dataset size, computing costs, and more. Once it incorporates a multimodal representation of the world, starting with vision+language, it will require additional orders of magnitude of data and parameters.

Future models that require petabytes of data, cost hundreds of millions of dollars to train, and need massive compute systems to run inference will not be viable. The current trend of incorporating all potentially relevant information into parametric memory has produced models with over 1.5T parameters (e.g., Switch-C and Wu Dao). This trend cannot continue for more than 2–3 years before such models become rather esoteric due to affordability considerations.

Codifying knowledge in the direct path of rapid execution is expensive. Knowledge that is directly encoded in the input-to-output execution path burdens and expands that path. A language model whose forward path grows 100X in size to accommodate more instantaneous knowledge will be significantly more computationally expensive, because there are larger tensors to move and more tensor arithmetic to perform. There are methods to decrease the amount of data movement and neural network computation (e.g., pruning, distillation, dynamic execution, and more). Even so, the clear overall trend continues, with energy costs growing as NN model sizes increase.
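A common rule of thumb from the scaling-law literature helps quantify this for dense Transformer language models. A forward pass costs roughly 2 FLOPs per parameter per token: one multiply and one add per weight. The estimate ignores attention terms that depend on context length. Under that approximation, compute per token grows linearly with model size, so the 100X forward path in the text costs about 100X more per token. The 11-billion-parameter baseline below is an illustrative assumption.

```python
def forward_flops_per_token(n_params: float) -> float:
    """Rule-of-thumb forward-pass cost of a dense Transformer:
    ~2 FLOPs (one multiply, one add) per parameter per token.
    Ignores attention terms that scale with context length."""
    return 2.0 * n_params


base = 11e9          # assumed baseline: an 11B-parameter dense model
grown = 100 * base   # the 100X larger forward path discussed in the text

print(f"baseline: {forward_flops_per_token(base) / 1e9:.0f} GFLOPs per token")
print(f"grown:    {forward_flops_per_token(grown) / 1e9:.0f} GFLOPs per token")
print(f"ratio:    {forward_flops_per_token(grown) / forward_flops_per_token(base):.0f}x")
```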

Acknowledging the three levels of knowledge allows much of the information to shift away from the NN parametric memory and into an adjacent knowledge graph (or even to stay in large information repositories, to be extracted when needed). Information and models that reside outside the parametric memory are ‘passive’ insofar as they are not activated and do not expend any energy until and unless they are accessed. Note that this stratified approach is categorically different from fully encapsulated architectures like GPT-3. Such architectures assume that any information the AI system might use must be encoded in its single-stratum parametric memory.

For example, when answering questions about history, the AI does not need to spend any energy on a whole section of knowledge about geology, which can stay dormant and passive in non-parametric memory. Moreover, during training, there is no need to expose the model to all the information that will reside in non-parametric memory. The training process need not be burdened with memorizing many facts and relations, as long as the model can retrieve or extract the information if and when needed at test and inference time.
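The idea that unused knowledge stays dormant can be illustrated with a lazily loaded, partitioned store. This is a toy sketch, not a Thrill-K component. `PartitionedKnowledgeStore`, the domain names, and the loader functions are all hypothetical. "Loading" stands in for whatever cost an accessed partition would incur.

```python
from typing import Callable, Dict, List


class PartitionedKnowledgeStore:
    """Hypothetical non-parametric store: each domain partition stays dormant
    (never loaded) until a query actually touches it."""

    def __init__(self, loaders: Dict[str, Callable[[], Dict[str, str]]]):
        self._loaders = loaders                   # domain -> function that loads its facts
        self._loaded: Dict[str, Dict[str, str]] = {}

    def lookup(self, domain: str, key: str) -> str:
        if domain not in self._loaded:            # pay the loading cost only on first use
            self._loaded[domain] = self._loaders[domain]()
        return self._loaded[domain].get(key, "unknown")

    def active_domains(self) -> List[str]:
        """Domains that have been activated so far."""
        return sorted(self._loaded)


store = PartitionedKnowledgeStore({
    "history": lambda: {"magna_carta": "1215"},
    "geology": lambda: {"pangaea": "supercontinent"},
})
print(store.lookup("history", "magna_carta"))  # a history question...
print(store.active_domains())                  # ...leaves geology dormant
```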

The principle of systems that have distinct levels of scale and efficiency seems to apply to many designed and evolved systems. For example, basic computer architecture has several levels of accessible information. The first, operational/instantaneous level is the CPU cache memory, which holds information readily available for use. The next level is main memory, which is orders of magnitude larger. Information is pulled from main memory into the cache as needed for execution. Further away are disks and shared platform storage.

These hold orders of magnitude more information than main memory, and information is retrieved from them as needed. Each successive level has at least 2–3 orders of magnitude more capacity and a much lower cost of maintaining each piece of information when not in use. Access latency is longer (farther information is less expedient), and information is accessed only when required.
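The caching behavior described above can be sketched as a toy three-tier hierarchy: information is pulled closer on demand, and whatever has not been used recently is evicted. The `TieredMemory` class, the capacities, and the latency values are arbitrary illustrative assumptions, not real hardware figures. Least-recently-used (LRU) eviction is only one common replacement policy.

```python
from collections import OrderedDict
from typing import Dict, Tuple


class TieredMemory:
    """Toy cache / main-memory / storage hierarchy with LRU promotion.
    Capacities and latencies are illustrative assumptions, not hardware figures."""

    LATENCY = {"cache": 1, "memory": 100, "storage": 100_000}  # arbitrary units

    def __init__(self, storage: Dict[str, str], cache_cap: int = 2, mem_cap: int = 8):
        self.cache: OrderedDict = OrderedDict()   # smallest, fastest tier
        self.memory: OrderedDict = OrderedDict()  # larger, slower tier
        self.storage = storage                    # largest tier; holds everything
        self.cache_cap = cache_cap
        self.mem_cap = mem_cap

    @staticmethod
    def _insert(tier: OrderedDict, cap: int, key: str, value: str) -> None:
        """Add key as most recently used; evict the least recently used if over capacity."""
        tier[key] = value
        tier.move_to_end(key)
        if len(tier) > cap:
            tier.popitem(last=False)

    def read(self, key: str) -> Tuple[str, str, int]:
        """Return (value, tier where it was found, access latency of that tier)."""
        if key in self.cache:
            self.cache.move_to_end(key)
            return self.cache[key], "cache", self.LATENCY["cache"]
        if key in self.memory:
            value, tier = self.memory[key], "memory"
            self.memory.move_to_end(key)
        else:
            value, tier = self.storage[key], "storage"  # KeyError if truly absent
            self._insert(self.memory, self.mem_cap, key, value)
        self._insert(self.cache, self.cache_cap, key, value)  # promote for next time
        return value, tier, self.LATENCY[tier]


mem = TieredMemory({"a": "1", "b": "2", "c": "3"})
for key in ("a", "a", "b", "c", "a"):
    print(key, mem.read(key))
```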

I believe that biological systems use a similar ‘gear-based’ system to cover a broad range of scope-vs-efficiency tasks. Let’s look at the body’s use of sugars as an example. At the operational level, glucose circulates in the bloodstream and is readily available to be turned into ATP and used as an energy source. Some of the energy derived from nutrition is converted into fat for long-term storage or into glycogen, which is stored in the liver and muscle cells. This storage can release energy back into the muscles during exercise when additional energy is required. The third level is the external world, which is the ultimate source of energy for the body.

A system with two internal ‘gears’ (operational/instantaneous and accessible in reserve) can span several orders of magnitude between instantaneous-but-limited and extracted-and-large. Access to external resources adds a third gear, which is slower to access but huge. The combination of the three levels creates a very wide range of operation across internal and external resources.

Thrill-K’s Essential Contribution to Robustness, Adaptation and Higher Intelligence

While layering knowledge in three levels is essential for scale, cost, and energy, it is also required for increasing the capabilities of AI systems. This becomes clear when evaluating the likely benefits of a Thrill-K system over an end-to-end DL system (referred to as a Class 1, Fully Encapsulated Information system).

By definition, Thrill-K is a superset architecture that includes a capable NN, so any capability that is well served by an NN can also be accomplished by the extended system. Here are some capabilities that could be better supported by a Thrill-K system that integrates deeply structured knowledge for extraction and access to external repositories:

· Improved multimodal machine understanding based on knowledge structures that capture multifaceted, object-centric semantics.

· Increased adaptability to new circumstances and tasks by extraction/retrieval of new information from repositories/knowledge base not available during pre-training or fine-tuning.

· Refined handling of discrete objects, ontologies, taxonomies, causal relations and broad memorization of facts.

· Enhanced robustness from the use of symbolic entities and abstracted concepts.

· Integrated commonsense knowledge which might not be directly present in the training dataset.

· Enabled symbolic reasoning and explainability over explicitly structured knowledge.
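As a small illustration of the last point, the sketch below applies one hand-written rule over explicit triples. It returns the facts that support its conclusion, and that is what makes the result explainable. The entities (`drug_a`, `enzyme_e`, and so on), the relation names, and the rule itself are hypothetical placeholders loosely modeled on the doctor example. They are not real pharmacology.

```python
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]

# Hypothetical facts; entity names are placeholders, not real drugs or enzymes.
KB: Set[Triple] = {
    ("drug_a", "inhibits", "enzyme_e"),
    ("drug_b", "metabolized_by", "enzyme_e"),
    ("drug_c", "metabolized_by", "enzyme_f"),
}


def explain_interaction(x: str, y: str, kb: Set[Triple]) -> List[str]:
    """Apply one rule in both directions:
    inhibits(A, E) and metabolized_by(B, E) -> possible interaction(A, B).
    Returns the supporting facts as a readable explanation (empty list if none)."""
    explanation = []
    for a, b in ((x, y), (y, x)):
        for subj, rel, enzyme in sorted(kb):
            if subj == a and rel == "inhibits" and (b, "metabolized_by", enzyme) in kb:
                explanation.append(f"{a} inhibits {enzyme}; {b} is metabolized by {enzyme}")
    return explanation


print(explain_interaction("drug_a", "drug_b", KB))
print(explain_interaction("drug_a", "drug_c", KB))
```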

Conclusion: Three-Level Knowledge and its Manifestation as Thrill-K Machine Architecture

As stated before, the last decade saw a significant leap in AI capabilities through the evolution and exploitation of DL. Despite some disappointments and deployment setbacks, DL’s outstanding capabilities are about to significantly impact multiple industries and fields of study. However, today’s DL systems lack the reasoning and higher cognition required for many tasks, and for that we need a new approach.

AI is coming into workplaces, homes, and cars, and it needs to be far more efficient and more capable of autonomous reasoning at a level closer to that of humans. It needs to be adaptive and capable of perceiving, abstracting, reasoning, and learning. Imagine an AI system that goes well beyond statistical correlations, understands language, integrates knowledge and reasoning, adapts to new circumstances, and is more robust and customizable.

This is not artificial general intelligence or conscious machines, but instead more capable, cognitive machines that can reason over deep knowledge structures including facts, declarative knowledge, causal knowledge, conditional and contextual knowledge, and relational knowledge. Reaching the next level of machine intelligence will require a knowledge-centric neuro-symbolic approach that integrates the best of what NNs have to offer with additional constructs such as a hierarchy of knowledge with its associated complementary strengths.

By applying the three-level knowledge hierarchy and the Thrill-K system architecture, we can build future systems and solutions that are likely to partition knowledge across those three levels to create sustainable and viable cognitive AI. The three levels are:

1) Instantaneous knowledge: commonly used knowledge and continuous functions that can be effectively approximated will reside in the fastest and most expensive layer, within the parametric memory for an NN or other working memory for other ML processing.

2) Standby knowledge: knowledge that is valuable to the AI system but less commonly used, that requires stronger representation of discrete entities, or that needs to be kept generalized and flexible for a variety of novel uses will reside in an adjacent knowledge base with as-needed extraction.

3) Retrieved external knowledge: the rest of the vast information of the world can stay outside the AI system, as long as it can be retrieved when needed.
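The partitioning criteria above can be expressed as a simple placement rule. This is an illustrative sketch only. The `KnowledgeItem` fields, the usage statistic, and the 100-uses-per-day threshold are hypothetical, and a real system would need far richer signals.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    INSTANTANEOUS = 1  # parametric memory (NN weights or other working memory)
    STANDBY = 2        # adjacent knowledge base, extracted as needed
    EXTERNAL = 3       # outside repositories, retrieved when needed


@dataclass
class KnowledgeItem:
    name: str
    uses_per_day: float    # hypothetical usage statistic
    discrete_entity: bool  # benefits from an explicit, symbolic representation
    keep_internally: bool  # valuable enough to maintain inside the AI system


def place(item: KnowledgeItem, hot_threshold: float = 100.0) -> Level:
    """Illustrative placement rule; the threshold is an arbitrary assumption."""
    if not item.keep_internally:
        return Level.EXTERNAL
    if item.uses_per_day >= hot_threshold and not item.discrete_entity:
        return Level.INSTANTANEOUS
    return Level.STANDBY


items = [
    KnowledgeItem("routine co-prescription pattern", 500, False, True),
    KnowledgeItem("drug mechanism facts", 5, True, True),
    KnowledgeItem("last month's clinical studies", 1, True, False),
]
for it in items:
    print(f"{it.name}: {place(it).name}")
```

In this rule, frequently used discrete facts still land in standby knowledge. That choice follows the text's point that discrete entities benefit from stronger representation.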

Thrill-K offers a new blueprint for this type of future AI architecture. It will permeate AI architecture across systems and industries and offer a method for building intelligence effectively and efficiently. The next generation of AI architecture is upon us and we must work together to try new approaches so we can push the state-of-the-art into more capable and accountable AI systems for all.

References

· Singer, Gadi. “The Rise of Cognitive AI”. Towards Data Science, April 6, 2021.

· Kahneman, Daniel. “Thinking, Fast and Slow”. Penguin Books, 2011.

· Bengio, Yoshua. “From System 1 Deep Learning to System 2 Deep Learning”. Presentation at NeurIPS 2019, December 11, 2019.

· Brown, Tom et al. “Language Models are Few-Shot Learners”. arXiv:2005.14165, May 28, 2020.

· Singer, Gadi. “Seat of Knowledge: Information-Centric Classification in AI”. LinkedIn, February 16, 2021.

· Singer, Gadi. “Seat of Knowledge: AI Systems with Deeply Structured Knowledge”. Towards Data Science, June 9, 2021.

· Singer, Gadi. “Seat of Knowledge: Information-Centric Classification in AI — Class 2”. LinkedIn, March 23, 2021.

· Robson, David. “A Brief History of the Brain”. New Scientist, September 21, 2011.

· Fedus, William, Zoph, Barret and Shazeer, Noam. “Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity”. arXiv:2101.03961, January 11, 2021.

· Feng, Coco. “US-China tech war: Beijing-funded AI researchers surpass Google and OpenAI with new language processing model”. South China Morning Post, June 2, 2021.

· Wasserblat, Moshe. “Best Practices for Text-Classification with Distillation Part (3/4) — Word Order Sensitivity (WOS)”. LinkedIn, June 8, 2021.

· Wasserblat, Moshe. “Best Practices for Text Classification with Distillation (Part 1/4) — How to achieve BERT results by using tiny models”. LinkedIn, May 17, 2021.

· Wasserblat, Moshe. “Best Practices for Text Classification with Distillation (Part 2/4) — Challenging Use Cases”. LinkedIn, May 26, 2021.

· Ye, Andre. “You Don’t Understand Neural Networks Until You Understand the Universal Approximation Theorem”. Analytics Vidhya, June 30, 2020.

· Singer, Gadi. “Understanding of and by Deep Knowledge”. Towards Data Science, May 6, 2021.

· Mims, Christopher. “Self-Driving Cars Could Be Decades Away, No Matter What Elon Musk Said”. Wall Street Journal, June 5, 2021.

This article builds on insights presented in earlier articles:

Understanding of and by Deep Knowledge

And a previous series published on LinkedIn:

Age of Knowledge Emerges:

Part 1: Next, Machines Get Wiser

Part 2: Efficiency, Extensibility and Cognition: Charting the Frontiers

Part 3: Deep Knowledge as the Key to Higher Machine Intelligence

Cognitive Computing Research: From Deep Learning to Higher Machine Intelligence

Gadi joined Intel in 1983 and has since held a variety of senior technical leadership and management positions in chip design, software engineering, CAD development and research. Gadi played a key leadership role in the product line introduction of several new microarchitectures, including the very first Pentium, the first Xeon processors, the first Atom products, and more. He was vice president and engineering manager of groups including the Enterprise Processors Division, Software Enabling Group, and Intel’s corporate EDA group. Since 2014, Gadi has participated in driving cross-company AI capabilities in HW, SW and algorithms. Prior to joining Intel Labs, Gadi was Vice President and General Manager of Intel’s Artificial Intelligence Platforms Group (AIPG).

Gadi received his bachelor’s degree in computer engineering from the Technion University, Israel, where he also pursued graduate studies with an emphasis on AI.
