Reacting to data in real time: how to set the tone for enterprise success

Could stream processing allow businesses to leverage their data in real time?

The ability to process data and get value from it instantly, whatever it looks like, whatever its size and wherever it lives, continues to redefine industry verticals across the board.

This is easier said than done. It makes it critical for enterprises to gradually move away from outdated legacy systems and toward a technology architecture that lets them unlock the value in data as soon as it is produced and put it to business use without delay. It is fair to say that data has taken the enterprise landscape by storm, from financial services and retail to healthcare and transportation.

Looking ahead, the ability to react to data in the moment and engage with customers in real time will determine success or failure for many organisations.

What is stream processing?

Stream processing, or the processing of data “in motion”, is imprinted in the DNA of large-scale tech companies like Facebook, Google, Netflix and Uber. These businesses continuously refine and improve their operations, from how they deliver a better customer experience to how they use it to stay ahead of the competition. This has helped the stream processing ecosystem mature and grow at a rapid pace. So what should any large business be doing to keep up?
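
To make processing data “in motion” concrete, here is a minimal sketch in plain Python. It does not use Flink's API or any other framework's, and the event shape and the function name `count_per_key` are hypothetical. Each event is handled as it arrives, and an updated per-key count is emitted immediately, instead of waiting for a complete dataset as a batch job would.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def count_per_key(events: Iterable[Tuple[str, str]]) -> Iterator[Tuple[str, int]]:
    """Consume (key, payload) events one at a time (hypothetical event shape)
    and emit the updated count for that key right after each event."""
    counts: Dict[str, int] = defaultdict(int)  # state carried between events
    for key, _payload in events:
        counts[key] += 1
        # The result is available as soon as this event has been processed.
        yield key, counts[key]


if __name__ == "__main__":
    clicks = [("user-a", "home"), ("user-b", "cart"), ("user-a", "checkout")]
    for update in count_per_key(clicks):
        print(update)
```

Because `count_per_key` is a generator, it works the same whether `events` is a finite list or an endless feed; a real engine adds what this sketch omits, such as fault-tolerant state, parallelism and handling of out-of-order events.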

Stream processing technologies have by now become fairly mainstream in the enterprise, moving well beyond tech companies. There are already signs of strong adoption even in verticals you might not expect at first, such as agriculture or manufacturing. Indeed, analysts have predicted that the streaming data applications market will reach more than $13 billion by 2021.

In financial services especially, stream processing and a streaming data architecture can help companies implement changes to business models and processes in an agile, cost-efficient manner. By adopting such an architecture, these companies can transform how they react to information: insight reaches the organisation in real time, and they can build 24/7, data-driven applications that track and act on changes or alerts instantly. Other use cases include real-time fraud detection, response to cybersecurity threats and personalised product offerings based on real-time data, with the added benefit of simplifying and automating regulatory compliance.
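
As an illustration of the fraud-detection use case, the framework-free Python sketch below applies one simple rule: flag a card the moment it makes more than a set number of transactions within a trailing time window. The `Transaction` type, the function name and the default thresholds are hypothetical, and the sketch assumes events arrive in timestamp order; production fraud detection typically combines many signals and models rather than a single count rule.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, Iterator


@dataclass(frozen=True)
class Transaction:
    card_id: str
    amount: float
    timestamp: float  # seconds; events assumed to arrive in timestamp order


def flag_suspicious(
    transactions: Iterable[Transaction],
    window_seconds: float = 60.0,  # hypothetical window length
    max_transactions: int = 3,     # hypothetical per-window limit
) -> Iterator[Transaction]:
    """Yield a transaction as soon as its card exceeds max_transactions
    within the trailing window_seconds (a count-based sliding window)."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    for tx in transactions:
        window = recent[tx.card_id]
        window.append(tx.timestamp)
        # Evict timestamps that have fallen out of the trailing window.
        while window and window[0] <= tx.timestamp - window_seconds:
            window.popleft()
        if len(window) > max_transactions:
            yield tx  # alert raised while the event is still "in motion"


if __name__ == "__main__":
    feed = [
        Transaction("c1", 10.0, 0.0),
        Transaction("c2", 5.0, 5.0),
        Transaction("c1", 12.0, 10.0),
        Transaction("c1", 9.0, 20.0),
        Transaction("c1", 15.0, 30.0),
        Transaction("c1", 20.0, 100.0),
    ]
    for alert in flag_suspicious(feed):
        print("suspicious:", alert)
```

Keeping only a small per-card deque of recent timestamps means memory stays bounded by the window size rather than by the full transaction history.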

Evolution of stream processing

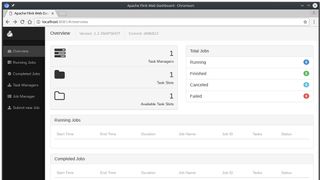

This is where technologies like Apache Flink, the fastest-growing open source stream processing engine available today, become key. Concrete evidence of the surge in popularity of stream processing is the sheer number of options available today, and the fact that most data companies now include at least one stream-oriented product in their commercial offering.

A major icebreaker was the addition of SQL, the de facto language for data handling, to these products, along with other features that make such frameworks more user-friendly and accessible to a wider audience, such as data and business analysts. If you are wondering how to choose among the available options, it largely comes down to how strict your requirements are for latency, throughput and data correctness.

To some extent, and for a large share of use cases (though not all), the evolution of stream processing will retire the otherwise popular Lambda architecture, which enforces a split between batch processing of data at rest and stream processing. The emerging Kappa architecture, in which batch data is processed as a special case of streaming, entered the picture to reduce codebase complexity and maintenance, and can relieve some of the operational burden of maintaining separate data processing systems.
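
In a Kappa-style design, reprocessing historical data means replaying the event log through the same code that handles live events, rather than maintaining a separate batch job. The framework-free Python sketch below illustrates the idea under simplifying assumptions: an in-memory list stands in for a durable, replayable log (such as a Kafka topic), and `enrich`, `run_pipeline` and `replay` are hypothetical names.

```python
from typing import Callable, Iterable, Iterator, List


def enrich(event: dict) -> dict:
    """Hypothetical business logic shared by historical and live processing:
    convert an amount in cents to euros."""
    return {**event, "amount_eur": round(event["amount_cents"] / 100, 2)}


def run_pipeline(source: Iterable[dict], logic: Callable[[dict], dict]) -> Iterator[dict]:
    """Single code path: `source` may be bounded (a replayed log) or unbounded (a live feed)."""
    for event in source:
        yield logic(event)


def replay(log: List[dict], from_offset: int = 0) -> Iterator[dict]:
    """Read an append-only log from an offset; reading to the end is the 'batch' case."""
    yield from log[from_offset:]


if __name__ == "__main__":
    log = [{"id": 1, "amount_cents": 1250}, {"id": 2, "amount_cents": 399}]
    # "Batch": reprocess the full history by replaying the log from offset 0.
    print(list(run_pipeline(replay(log), enrich)))
    # "Streaming": newly appended events go through exactly the same code.
    log.append({"id": 3, "amount_cents": 100})
    print(list(run_pipeline(replay(log, from_offset=2), enrich)))
```

The design point is that a bug fix or logic change in `enrich` is applied to both historical and new data by replaying, with no second batch implementation to keep in sync.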

The right choice of technology and approach can go a long way toward helping companies achieve a return on investment much faster and streamline the development and rollout of distributed, real-time applications. For example, after Apache Flink had been in production at Alibaba for one year, the company reported a 30 percent increase in conversion rate during Singles Day 2016, the popular annual shopping holiday, with $25 billion worth of merchandise sold in a single day.

Getting ahead of the curve

As data gets bigger, faster and more complex, enterprises need to step up their game and realise that smart investment in modernising their data infrastructure depends not on how much money is spent, but on how much time is dedicated to finding the right fit.

Stream processing is now a widespread paradigm that makes it easier than ever to benefit from real-time data processing, regardless of technical expertise, and it enables a wide range of mission-critical use cases.

Enterprises should get involved early to build the talent, skills and operations that will improve their chances of staying ahead of the curve.

Marta Paes Moreira, Product Evangelist at Ververica (formerly data Artisans)

Marta is a Technology Evangelist at Ververica, the original creators of Apache Flink, the industry-leading event stream processing framework. An enthusiast of cutting-edge technology and a restless learner, she took on the challenge of presenting engineering products through the eyes of the customer after working as a developer in e-commerce (Zalando) and data consulting (Unit4, Accenture).

She has over one year of work experience.